In an era of exploding digital data, the need to collect and process information from the Internet is becoming increasingly urgent. This is where Web Scraping serves as a powerful alternative to time-consuming and resource-intensive manual data collection.

So what is Web Scraping? How does it work, and what value does it bring to individuals and businesses? Join Hidemium to discover the important things you need to know before you start using this technology.

1. What is Web Scraping?

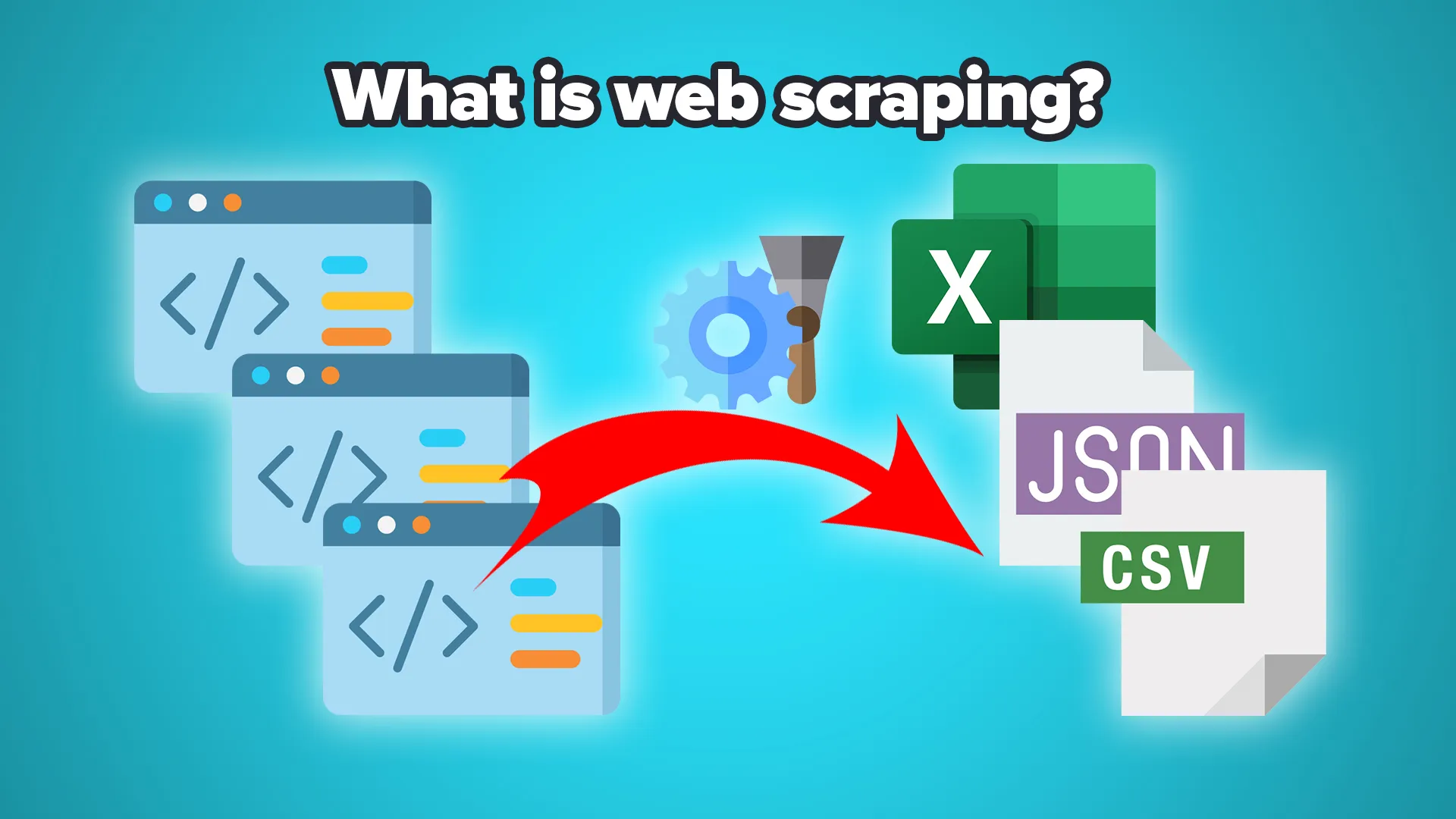

Web Scraping is the technique of automatically collecting information from websites through software or code called bots. These bots access the HTML source code of a website, extract the necessary data, and save it to a spreadsheet file or database, or pass it on through an API. The data serves purposes such as market research, updating product data, and competitor analysis.

The tool that performs this process is called a Web Scraper. A Web Scraper is designed to scan and analyze the structure of a website, identify the elements that contain important information (e.g. prices, product names, article content), and automatically collect them according to predefined configurations.
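
As a rough illustration of "identify elements and collect them according to a predefined configuration", the sketch below uses only Python's standard `html.parser` module. The class names `product-name` and `product-price`, the `FIELD_CLASSES` mapping, and the sample HTML are all assumptions made up for this example. A real site will use its own markup, and production scrapers usually rely on a dedicated parsing library.

```python
from html.parser import HTMLParser

# Hypothetical configuration: output field name -> CSS class that marks it.
FIELD_CLASSES = {"name": "product-name", "price": "product-price"}


class FieldExtractor(HTMLParser):
    """Collects the text of elements whose class appears in the configuration."""

    def __init__(self, field_classes):
        super().__init__()
        self.class_to_field = {cls: field for field, cls in field_classes.items()}
        self.current_field = None  # field whose text we expect next, if any
        self.records = []          # one dict per extracted item

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in self.class_to_field:
                self.current_field = self.class_to_field[cls]

    def handle_data(self, data):
        if self.current_field is None or not data.strip():
            return
        # Start a new record when the latest one already holds this field.
        if not self.records or self.current_field in self.records[-1]:
            self.records.append({})
        self.records[-1][self.current_field] = data.strip()
        self.current_field = None


def extract_fields(html, field_classes=FIELD_CLASSES):
    """Return a list of dicts, one per item found in the HTML text."""
    parser = FieldExtractor(field_classes)
    parser.feed(html)
    parser.close()
    return parser.records


# Made-up markup standing in for a downloaded product page.
SAMPLE_HTML = """
<ul>
  <li><span class="product-name">Keyboard</span><span class="product-price">$49.00</span></li>
  <li><span class="product-name">Mouse</span><span class="product-price">$19.50</span></li>
</ul>
"""

if __name__ == "__main__":
    for record in extract_fields(SAMPLE_HTML):
        print(record)
```

Keeping the field-to-class mapping separate from the parsing logic means the same extractor can be pointed at a different page layout by changing only the configuration.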


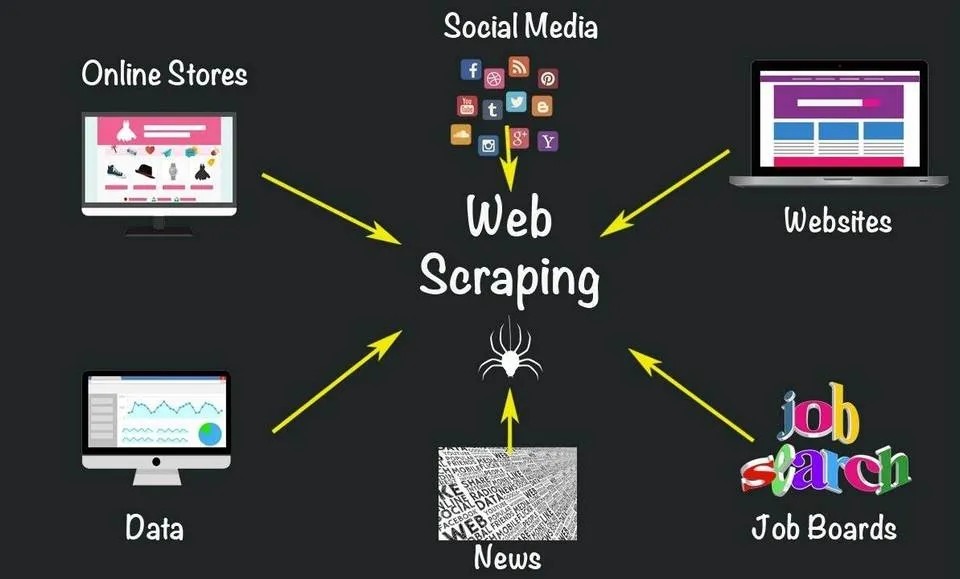

2. What is Web Scraping used for?

Web Scraping is now widely applied in many different fields. Below are its most common purposes:

Collect market data: Helps businesses quickly access information about prices, customer feedback, and consumption trends from e-commerce sites, effectively supporting competitive analysis and market research.

Social research and analysis: Web Scraping tools can gather data from online newspapers, forums, blogs, or government websites to evaluate trends, public opinion, and user behavior.

Automatically update news: The system can continuously collect the latest news from reputable sources, so users stay up to date without having to monitor each page manually.

Collect product and service data: In e-commerce, using a Web Scraper to gather data from competitors helps businesses understand the market and adjust product strategies effectively.

Optimize advertising and marketing campaigns: Information about customer and competitor behavior obtained through Web Scraping is an important foundation for improving the efficiency of digital marketing.

Track and compare prices online: Web Scraping helps users and businesses monitor product or service prices from multiple sources, making it easy to find the best price.

Multi-source data aggregation: A Web Scraper can collect data from multiple websites, building a comprehensive data warehouse for in-depth analysis and business decision making.

Content automation: The collected data can be processed to automatically generate content for websites, blogs, or applications, saving time on manual content production.


3. Web Scraping Applications in Prominent Fields

According to LinkedIn statistics from the US, Web Scraping has been applied in more than 54 different fields. Below are the industries with the highest rates of Web Scraping usage:

Computer software – 22%

Information technology & digital services – 21%

Finance – banking – insurance – 16% (including: financial services 12%, insurance 2%, banking 2%)

Internet and online platforms – 11%

Digital Advertising & Marketing – 5%

Cyber Security & Information Security – 3%

Management Consulting – 2%

Digital Media and Publishing – 2%

This shows that Web Scraping is not only useful in the technology field, but is also an important tool for collecting market data, monitoring competitors, tracking trends, and automating user analytics in many different industries.


4. The most popular types of Web Scrapers today

A Web Scraper is a tool that automatically collects data from websites. Based on technical criteria and user experience, Web Scrapers can be classified as follows:

4.1. By construction method: Self-built and Pre-built

Self-built: Written from scratch in popular languages such as Python, Java, or JavaScript (Node.js). This type requires programming skills and an in-depth understanding of how websites work.

Pre-built: Ready-made libraries and tools such as Scrapy and BeautifulSoup (Python) or Puppeteer (JavaScript). They suit users who want to deploy quickly without building everything from scratch.

4.2. By deployment type: Browser extension vs Standalone software

Browser extension: An extension integrated into the browser that extracts data directly from the website being visited.

Software: A standalone application installed on the computer that operates separately from the browser. It is often more powerful and highly customizable.

4.3. By user interface: With UI vs Without UI

With UI: Has an intuitive graphical interface that is easy for non-technical people to use.

Without UI: Operates via the command line (CLI), requires programming skills, and is suitable for advanced developers.
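
To give a feel for what a scraper without a UI looks like from the user's side, here is a minimal command-line sketch built with Python's standard `argparse` module. The command name `scrape` and the `--format` and `--output` options are hypothetical choices for this example and do not describe any particular tool.

```python
import argparse


def build_parser():
    """Define the command-line interface of a hypothetical scraper."""
    parser = argparse.ArgumentParser(
        prog="scrape",  # hypothetical command name
        description="Fetch a page and export selected fields.",
    )
    parser.add_argument("url", help="address of the page to scrape")
    parser.add_argument(
        "--format",
        choices=["csv", "json"],
        default="csv",
        help="output format (default: csv)",
    )
    parser.add_argument(
        "--output",
        default="-",
        help="output file, or - for standard output (default: -)",
    )
    return parser


if __name__ == "__main__":
    # An explicit argument list is used so the sketch runs without a terminal.
    args = build_parser().parse_args(
        ["https://example.com/products", "--format", "json"]
    )
    print(f"Would scrape {args.url} and write {args.format} to {args.output}")
```

In a real tool the argument list would come from the terminal, for example `scrape https://example.com/products --format json`.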

4.4. By data storage and processing location: Cloud-based vs Local

Cloud-based: Runs on remote servers, supports flexible data processing and storage, scales on demand, and is independent of the user's device.

Local: Installed and run directly on a personal computer. Users need to configure and maintain it themselves and are responsible for system performance.


5. How does Web Scraping work?

To get started, you enter the URL of the target website into the Scraper tool. The tool then downloads the entire HTML code of the page, including JavaScript and CSS if necessary.
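
The download step can be sketched with Python's standard `urllib.request` module. The function name `fetch_html` and the `USER_AGENT` value are assumptions for this example. Note that this approach retrieves only the HTML document itself: it does not execute JavaScript, so content rendered in the browser would need a browser-automation tool instead.

```python
import urllib.request

# Hypothetical identifier sent with each request.
USER_AGENT = "example-scraper/0.1"


def fetch_html(url, timeout=10.0):
    """Download a page and return its body decoded as text."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Use the charset declared by the server, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


if __name__ == "__main__":
    # A data: URL stands in for a real page so the sketch runs offline.
    print(fetch_html("data:text/html,<h1>Hello</h1>"))
```

Replacing the `data:` URL with the address of a real page performs an actual HTTP request, so the timeout and error handling become important in practice.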

Users can then select the specific types of data they want to extract, such as product price, size, article title, or detailed content. The scraper crawls the relevant pages to collect the corresponding information. If the website has a static structure, extraction can be configured automatically. For most dynamic pages, however, the user needs to set it up manually because the HTML structure differs from page to page.
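
One way to picture how a scraper finds "the relevant pages" is link discovery: read every link on a page, resolve it against the page address, and keep only links on the same site. The sketch below does this with the standard `html.parser` and `urllib.parse` modules. The function name `find_same_site_links` and the `shop.example` addresses are assumptions for illustration, and real crawlers add more filtering than shown here.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Gathers the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def find_same_site_links(html, base_url):
    """Return absolute links on the same host as base_url, in page order."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlparse(base_url).netloc
    links = []
    for href in collector.hrefs:
        absolute = urljoin(base_url, href)  # resolve relative links
        if urlparse(absolute).netloc == host and absolute not in links:
            links.append(absolute)
    return links


if __name__ == "__main__":
    # Made-up page content and address.
    page = (
        '<a href="item-1.html">Item 1</a>'
        '<a href="/about">About</a>'
        '<a href="https://other.example/x">Elsewhere</a>'
    )
    for link in find_same_site_links(page, "https://shop.example/catalog/"):
        print(link)
```

Each discovered link can then be downloaded and parsed in turn, which is how a scraper moves from a listing page to the individual pages that hold the data.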

The collected data is exported in popular formats such as CSV, Excel, or JSON, the last of which is well suited to integration with API systems.
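
The export step might look like the following sketch, which serializes the same records as CSV and as JSON using Python's standard `csv` and `json` modules. The function names `to_csv` and `to_json` and the sample records are assumptions for this example. The CSV output can be opened in spreadsheet software such as Excel, while writing a native Excel workbook would need a third-party library and is not shown.

```python
import csv
import io
import json


def to_csv(records, fieldnames):
    """Serialize a list of dicts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize a list of dicts as indented JSON text."""
    return json.dumps(records, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    # Made-up records standing in for scraped data.
    records = [
        {"name": "Keyboard", "price": "49.00"},
        {"name": "Mouse", "price": "19.50"},
    ]
    print(to_csv(records, ["name", "price"]))
    print(to_json(records))
```

JSON keeps nested structure and maps directly onto the payloads most web APIs accept, which is why it is usually the more convenient choice when the data feeds another system.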

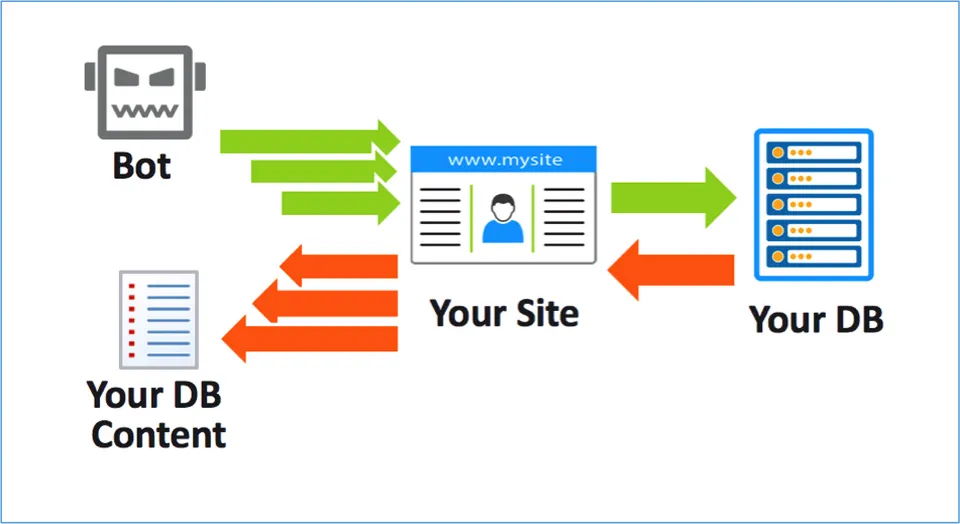

Although Web Scraping is a powerful tool for large-scale data processing and mining, it is not always easy to deploy, especially for those who need to run multiple accounts or perform advanced automation. Many websites today have implemented security measures such as blocking IP addresses and detecting unfamiliar devices, which can interrupt data collection.

This is why Hidemium AntiDetect Browser is an ideal choice. Hidemium lets you manage multiple browser profiles and combine them with proxies to change your IP address and device fingerprint, helping you get past website security barriers effectively and safely.

In short, Web Scraping is a great way to collect information in the digital age, but it comes with important legal and ethical considerations. Always make sure that data collection is done legally. If you need assistance with tools or implementation, don't hesitate to contact Hidemium for detailed advice.
